Deploy a PyTorch Model with the Okteto CLI

In this article, you will deploy an already trained model that generates unique, personalized, original love letters that people can send to their partners or crushes.

Effective deployment of machine learning models is more of an art than a science.

Text generation model with PyTorch

Text generation is a natural language generation (NLG) task in which a model produces coherent, meaningful text. The model is trained on a body of text and learns the likelihood of each word given the sequence of words that precede it. At inference time, it uses those likelihoods to produce new text from a prompt. We are going to clone a text generation model built with PyTorch and deploy it on Okteto.
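The phrase "the likelihood of a word based on the previous words" can be made concrete with a deliberately tiny example. The sketch below conditions on only one previous word (a bigram model) and estimates probabilities by counting word pairs in plain Python. It is not the PyTorch model in the repository, which is a neural model trained with PyTorch. The function names and the three-sentence corpus are hypothetical and chosen only for illustration.

```python
from __future__ import annotations

from collections import Counter, defaultdict


def train_bigrams(corpus: list[str]) -> dict[str, Counter]:
    """Count how often each word follows each previous word (hypothetical helper)."""
    counts: dict[str, Counter] = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        for prev, nxt in zip(words, words[1:]):
            counts[prev][nxt] += 1
    return counts


def next_word_probs(counts: dict[str, Counter], prev: str) -> dict[str, float]:
    """Estimate P(next word | previous word) from the bigram counts."""
    followers = counts.get(prev.lower())
    if not followers:
        return {}  # the word was never followed by anything in the corpus
    total = sum(followers.values())
    return {word: n / total for word, n in followers.items()}


def generate(counts: dict[str, Counter], seed: str, length: int = 5) -> str:
    """Greedy generation: repeatedly append the most likely next word."""
    words = seed.lower().split()
    for _ in range(length):
        probs = next_word_probs(counts, words[-1])
        if not probs:
            break
        words.append(max(probs, key=probs.get))
    return " ".join(words)


# Toy corpus, made up for this example.
corpus = [
    "my sweet heart i miss you",
    "my sweet love i love you",
    "i miss you every day",
]
counts = train_bigrams(corpus)
print(next_word_probs(counts, "i"))  # {'miss': 0.6666666666666666, 'love': 0.3333333333333333}
print(generate(counts, "my", 4))     # my sweet heart i miss
```

Greedy decoding always picks the single most likely word, so it quickly becomes repetitive. Practical text generators usually sample from the predicted distribution instead.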

We'll start by cloning the repository housing the model:

$ git clone https://github.com/Emekaborisama/Text-Generation-with-pytorch.git

I have already trained the model and served it through a Flask API. The training script is in the train folder, and the inference and API scripts are in the app folder.

After cloning the repository, take note of its folder structure. I recommend this layout to anyone deploying a machine learning model with Flask and Okteto.

Next, navigate to the Text-Generation-with-pytorch folder and install the required packages:

$ cd Text-Generation-with-pytorch

$ pip install -r requirements.txt

Once the installation is complete, verify that the model works by running the command:

$ python3 main.py

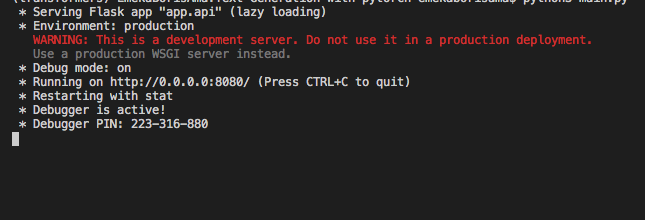

The command above should return an output similar to the one below:

Now, let's deploy the model to Okteto.

Deploying to Okteto

Okteto is a developer platform that speeds up the development workflow for cloud-native applications. You can deploy to Okteto either through the Okteto UI or through the Okteto CLI.

Okteto Cloud

Okteto Cloud gives developers instant access to secure Kubernetes namespaces so they can code, build, and run Kubernetes applications entirely in the cloud. With Okteto Cloud, you can deploy your machine learning model with the click of a button.

Okteto CLI

The CLI tool, on the other hand, lets you develop your cloud-native applications directly in Kubernetes. With the Okteto CLI, you can code locally in your preferred IDE, and Okteto automatically syncs your changes to the cluster.

To install Okteto CLI, use the command below:

$ brew install okteto

Not using macOS? Check the installation page in the Okteto documentation for instructions for your operating system.

Deploying with the Okteto CLI

Before using the Okteto CLI, you need to log in to Okteto so that the CLI can create, delete, push, and synchronize changes from your local machine. You only need to do this once.

Configure your Okteto CLI context to Okteto Cloud:

$ okteto context use https://cloud.okteto.com

A browser tab will open automatically to confirm that you are logged in. If this is the first time you are using Okteto, it will ask you to log in with your GitHub account.

After configuring your Okteto context, create a manifest file named okteto-stack.yaml. This file defines the application's configuration in a docker-compose-like format.

$ touch okteto-stack.yaml

Okteto Stacks are designed for developers who don't want to deal with the complexity of Kubernetes manifests.

Add the following to your okteto-stack.yaml file:

name: textgen

services:
  textgeneration:
    public: true
    image: okteto.dev/text_generation_with_pytorch
    build: .
    replicas: 1
    ports:
      - 8080
    resources:
      cpu: 2000m
      memory: 4280Mi
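The resources block uses Kubernetes quantity notation. `cpu: 2000m` means 2000 millicores, which is two CPU cores. `memory: 4280Mi` means 4280 mebibytes, about 4.18 GiB. The helper below is a minimal sketch that converts exactly these two forms. The function names are hypothetical, and it is not a full parser of the Kubernetes quantity format; for example, it rejects decimal suffixes such as `M` or `G`.

```python
# Multipliers for the binary (power-of-two) memory suffixes Kubernetes accepts.
BINARY_SUFFIXES = {"Ki": 2**10, "Mi": 2**20, "Gi": 2**30, "Ti": 2**40}


def cpu_to_cores(quantity: str) -> float:
    """'2000m' -> 2.0 cores; a bare number such as '2' or '0.5' is already in cores."""
    if quantity.endswith("m"):
        return int(quantity[:-1]) / 1000
    return float(quantity)


def memory_to_bytes(quantity: str) -> int:
    """'4280Mi' -> bytes. Handles only the binary suffixes above or a plain byte count."""
    for suffix, factor in BINARY_SUFFIXES.items():
        if quantity.endswith(suffix):
            return int(quantity[: -len(suffix)]) * factor
    return int(quantity)


cores = cpu_to_cores("2000m")
mem = memory_to_bytes("4280Mi")
print(cores)             # 2.0
print(mem, mem / 2**30)  # 4487905280 4.1796875
```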

With the stack file in place, deploy the model to Okteto using the command:

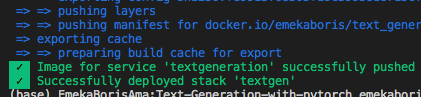

$ okteto stack deploy --build

This command builds the container image described in okteto-stack.yaml, using the current directory as the build context (`build: .`), and then deploys the application.

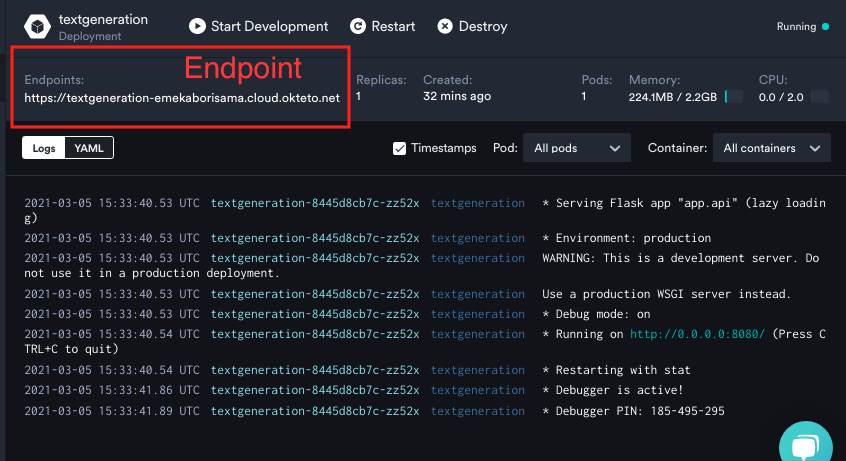

Now we have deployed our PyTorch generation model. The deployment command starts an application on your dashboard:

With the application deployed, let's generate a love letter from the terminal by sending a POST request to the /lovelettergen endpoint using curl:

$ curl --location --request POST 'http://textgen-emekaborisama.cloud.okteto.net/lovelettergen' \
  --form 'content="sweet heart"'

The response from the command above:

finding happy. really, especially, up take calling want funny kingdom you'll deeply. send away making thrilled also, care elements sad, wife. enjoyable better whereby hanging count times. felt. nicest else; kisses constant
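You can also call the endpoint from Python instead of curl. The sketch below sends the same request using only the standard library. `curl --form` sends a multipart/form-data body, so the sketch builds one by hand with a single `content` field. The function names `build_request` and `generate_letter` are hypothetical. The URL is the one from the curl example; your deployment will have its own URL, shown in the Okteto dashboard. The sketch also assumes the endpoint returns the letter as plain text, as in the response above.

```python
import urllib.request
import uuid

# URL from the curl example; replace it with your own deployment's endpoint.
URL = "http://textgen-emekaborisama.cloud.okteto.net/lovelettergen"


def build_request(url: str, content: str) -> urllib.request.Request:
    """Build a multipart/form-data POST with one 'content' field, like curl --form."""
    boundary = uuid.uuid4().hex  # random boundary that will not appear in the text
    body = (
        f"--{boundary}\r\n"
        'Content-Disposition: form-data; name="content"\r\n'
        "\r\n"
        f"{content}\r\n"
        f"--{boundary}--\r\n"
    ).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        method="POST",
        headers={"Content-Type": f"multipart/form-data; boundary={boundary}"},
    )


def generate_letter(url: str, content: str, timeout: float = 60.0) -> str:
    """Send the request and return the response body decoded as text."""
    with urllib.request.urlopen(build_request(url, content), timeout=timeout) as resp:
        return resp.read().decode("utf-8")
```

If the deployment is running, calling `print(generate_letter(URL, "sweet heart"))` should print a generated letter similar to the response shown above.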

Conclusion

In this article, we deployed a PyTorch text generation model, served with Flask, using the Okteto CLI. Okteto Stacks make it easy to deploy machine learning APIs on Kubernetes.

Our next article will focus on deploying the model from the GitHub repository through the Okteto Cloud UI.

Arsh Sharma