Building a Machine Learning Application with spaGO and Okteto Cloud

Author bio: Sangam is a Developer Advocate at Accurics. He's also a Docker Community Leader Award winner and an Okteto and Traefik Community Ambassador. You can reach out to him on Twitter to connect and chat more about cloud-native applications!

I've always marveled at the power of machine learning. There are so many manual tasks that are now super simple to automate, thanks to ML algorithms! While researching this topic, I recently discovered spaGO and was blown away by how cool and powerful it is.

This post will show you how you can use spaGO and Okteto Cloud to build and run a service that answers questions written in English.

What is spaGO?

spaGO is an open-source machine learning library written in pure Go, with a focus on natural language processing. It was created by Matteo Grella. It uses its own lightweight computational graph framework for both training and inference, which makes it easy to understand from start to finish.
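To make "computational graph" concrete, here is a minimal sketch of reverse-mode automatic differentiation over scalar values in Go. It is not spaGO's API: the Node type and the NewVar, Add, Mul, and Backward functions are hypothetical names, and a real ML library works with matrices and many more operators. The core idea is the same, though: each operation records how to pass gradients back to its inputs, so a single backward pass computes every derivative that training needs.

```go
package main

import "fmt"

// Node is one scalar value in a tiny computational graph.
// Hypothetical illustration only; this is not spaGO's API.
type Node struct {
	Value    float64
	Grad     float64
	parents  []*Node
	backward func() // propagates this node's Grad to its parents
}

// NewVar creates a leaf node (an input or a trainable parameter).
func NewVar(v float64) *Node { return &Node{Value: v, backward: func() {}} }

// Add records z = a + b in the graph.
func Add(a, b *Node) *Node {
	z := &Node{Value: a.Value + b.Value, parents: []*Node{a, b}}
	z.backward = func() {
		a.Grad += z.Grad // dz/da = 1
		b.Grad += z.Grad // dz/db = 1
	}
	return z
}

// Mul records z = a * b in the graph.
func Mul(a, b *Node) *Node {
	z := &Node{Value: a.Value * b.Value, parents: []*Node{a, b}}
	z.backward = func() {
		a.Grad += b.Value * z.Grad // dz/da = b
		b.Grad += a.Value * z.Grad // dz/db = a
	}
	return z
}

// Backward runs reverse-mode differentiation starting from out.
func Backward(out *Node) {
	// Topologically sort the graph so each node's gradient is complete
	// before it is pushed back to that node's parents.
	var order []*Node
	seen := map[*Node]bool{}
	var visit func(n *Node)
	visit = func(n *Node) {
		if seen[n] {
			return
		}
		seen[n] = true
		for _, p := range n.parents {
			visit(p)
		}
		order = append(order, n)
	}
	visit(out)
	out.Grad = 1
	for i := len(order) - 1; i >= 0; i-- {
		order[i].backward()
	}
}

func main() {
	// y = w*x + b, the building block of a linear layer.
	w, x, b := NewVar(3), NewVar(2), NewVar(1)
	y := Add(Mul(w, x), b)
	Backward(y)
	fmt.Println(y.Value, w.Grad, x.Grad, b.Grad) // 7 2 3 1
}
```

Because gradients are accumulated with `+=`, a value used twice (for example `Mul(v, v)`) correctly receives the sum of both contributions.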

The self-contained nature of spaGO also makes it easy to run as a container, which makes it a great fit for Okteto.

What is Okteto?

Okteto is a developer platform used to accelerate the development workflow of cloud-native applications. We'll use Okteto to build and deploy our spaGO service.

If this is your first time using Okteto, go ahead and sign up for a free Okteto Cloud account; we'll need it in the next step.

Initial Setup

Start by cloning the spaGO GitHub repository to your local machine.

git clone https://github.com/nlpodyssey/spago/

To deploy our spaGO service in Okteto, we need a container image. The repository already includes a Dockerfile with all the instructions needed to build and start a spaGO application. Let's go ahead and build it.

Traditionally, we would use Docker to build the image locally and then push it to Docker Hub. Instead, we're going to take advantage of two cool features of Okteto: its build service and its container registry.

You need to install the Okteto CLI to use them. I'm on a Mac, so all I need to do is run brew install okteto. Check out the Okteto installation docs to learn how to install it on other operating systems.

Once you've installed the Okteto CLI, run okteto context use to point it at Okteto Cloud:

okteto context use https://cloud.okteto.com

✓ Using context cindy @ cloud.okteto.com

i Run 'okteto kubeconfig' to set your kubectl credentials

You can now use the okteto build command to build the image and push it to Okteto's registry, as shown below:

cd spago

okteto build -t okteto.dev/spago

i Running your build in tcp://buildkit.cloud.okteto.net:1234...

...

...

✓ Image 'okteto.dev/spago' successfully pushed

We now have a container image that we can use to run our spaGO application. But why do we need to do this instead of running it locally?

Why use a container for machine learning development?

Having our spaGO application in a container allows us to run it anywhere. We can run it locally if we want to. But more importantly, we can now run it in the cloud.

Running machine learning applications in the cloud gives us a lot of benefits, such as:

- Access to as much memory and CPU as we need.

- Access to high-performance CPUs and, more importantly, GPUs.

- Storage that scales with our data.

- Fast access to data, since our local connection no longer limits us.

Deploy your spaGO application in the cloud

If you look closely at spaGO's Dockerfile, you'll notice that it includes a CLI. This CLI lets us run the pre-trained models that spaGO supports. Today, we're going to use the "Question Answering" program, which runs the deepset/bert-base-cased-squad2 model from Hugging Face.
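Extractive question-answering models, such as BERT fine-tuned on SQuAD, typically don't generate new text. Instead, they score every token of the passage as a possible answer start and as a possible answer end, and the answer is the span with the highest combined score. The Go sketch below shows that decoding step with made-up logits; BestSpan is a hypothetical helper, not part of spaGO, and spaGO's actual implementation may differ.

```go
package main

import (
	"fmt"
	"math"
)

// BestSpan returns the token span [start, end] that maximizes
// startLogits[start] + endLogits[end], subject to start <= end and a
// span length of at most maxLen tokens. Hypothetical helper, not spaGO code.
func BestSpan(startLogits, endLogits []float64, maxLen int) (int, int, float64) {
	bestStart, bestEnd := -1, -1
	bestScore := math.Inf(-1)
	for i := range startLogits {
		// Only consider ends at or after the start, within maxLen tokens.
		for j := i; j < len(endLogits) && j-i+1 <= maxLen; j++ {
			if s := startLogits[i] + endLogits[j]; s > bestScore {
				bestStart, bestEnd, bestScore = i, j, s
			}
		}
	}
	return bestStart, bestEnd, bestScore
}

func main() {
	// Made-up tokens and logits for the question "What is spaGO written in?".
	tokens := []string{"spaGO", "is", "written", "in", "pure", "Go"}
	start := []float64{0.1, -1.0, -2.0, -1.5, 2.5, 1.0}
	end := []float64{-1.0, -2.0, -1.0, -0.5, 0.5, 3.0}
	i, j, score := BestSpan(start, end, 30)
	fmt.Println(tokens[i:j+1], score) // [pure Go] 5.5
}
```

Models trained on SQuAD 2.0 can also decline to answer, commonly by comparing the best span's score with the score of the special [CLS] token; this sketch leaves that step out.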

To deploy our application, we'll be using Okteto Stacks. Okteto Stacks is an application format similar to Docker Compose that Okteto created for developers who don't want to deal with the complexities of Kubernetes manifests or Helm charts.

Start by creating a stack manifest file for your application:

touch okteto-stack.yaml

In the okteto-stack.yaml file, define your application:

name: spago
services:
  spago:
    public: true
    image: okteto.dev/spago
    build: .
    ports:
      - 1987
    command:
      - /docker-entrypoint
      - bert-server
      - server
      - --repo=/spago
      - --model=deepset/bert-base-cased-squad2
      - --tls-disable
    resources:
      storage: 4Gi
      memory: 4Gi
      cpu: 800m
    volumes:
      - /spago

Let's take a look at the critical parts of the manifest:

- name: The name of your stack.

- services: The services to deploy. In this case, there's only one.

- public: Tells Okteto to create an HTTPS endpoint for your application.

- image: The container image to use (in this case, the one we built in the previous step).

- build: The path to the container's build context.

- command: The command to run in the container. In this case, we're running the spaGO CLI with the "Question Answering" model.

- ports: The ports your service exposes.

- resources: The resources your service needs to run.

- volumes: The folders in your container that you want to persist. In this case, we use it to store the model files, so the model isn't downloaded every time you redeploy or restart your application.
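The resources values use Kubernetes-style quantities, assuming Okteto passes them through to Kubernetes unchanged, as the notation suggests: cpu: 800m means 800 millicores, or 0.8 of a CPU core, and 4Gi means 4 gibibytes (4 × 2^30 = 4,294,967,296 bytes). The Go sketch below converts the values from this manifest. ParseBytes and ParseMilliCPU are hypothetical helpers that handle only the suffixes shown here, not the full Kubernetes quantity grammar.

```go
package main

import (
	"fmt"
	"strconv"
	"strings"
)

// binarySuffixes maps Kubernetes binary (power-of-two) suffixes to byte multipliers.
var binarySuffixes = map[string]int64{
	"Ki": 1 << 10,
	"Mi": 1 << 20,
	"Gi": 1 << 30,
	"Ti": 1 << 40,
}

// ParseBytes converts a quantity such as "4Gi" into bytes. It accepts only
// plain integers or an integer followed by one of the binary suffixes above.
func ParseBytes(q string) (int64, error) {
	for suffix, mult := range binarySuffixes {
		if strings.HasSuffix(q, suffix) {
			n, err := strconv.ParseInt(strings.TrimSuffix(q, suffix), 10, 64)
			if err != nil {
				return 0, err
			}
			return n * mult, nil
		}
	}
	return strconv.ParseInt(q, 10, 64)
}

// ParseMilliCPU converts a CPU quantity such as "800m" (millicores) or "2"
// (whole cores) into millicores.
func ParseMilliCPU(q string) (int64, error) {
	if strings.HasSuffix(q, "m") {
		return strconv.ParseInt(strings.TrimSuffix(q, "m"), 10, 64)
	}
	cores, err := strconv.ParseInt(q, 10, 64)
	if err != nil {
		return 0, err
	}
	return cores * 1000, nil
}

func main() {
	mem, err := ParseBytes("4Gi")
	if err != nil {
		panic(err)
	}
	cpu, err := ParseMilliCPU("800m")
	if err != nil {
		panic(err)
	}
	fmt.Println(mem, cpu)                          // 4294967296 800
	fmt.Printf("%.1f cores\n", float64(cpu)/1000) // 0.8 cores
}
```

The same arithmetic explains why the memory and storage limits matter here: a BERT-base model's weights alone take a sizable fraction of a gibibyte, so 4Gi leaves room for the runtime and downloaded files.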

Run the command to deploy your spaGO application in Okteto Cloud:

okteto stack deploy --wait

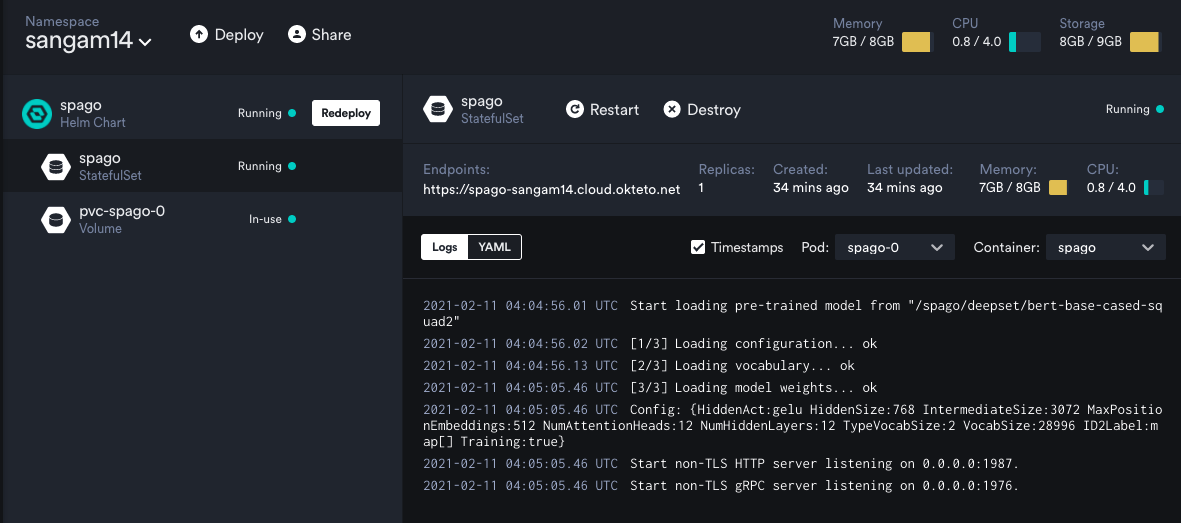

The command above will wait until your application is ready. Once it finishes, navigate to Okteto Cloud's dashboard. There you will see the application, the logs, and the public endpoint.

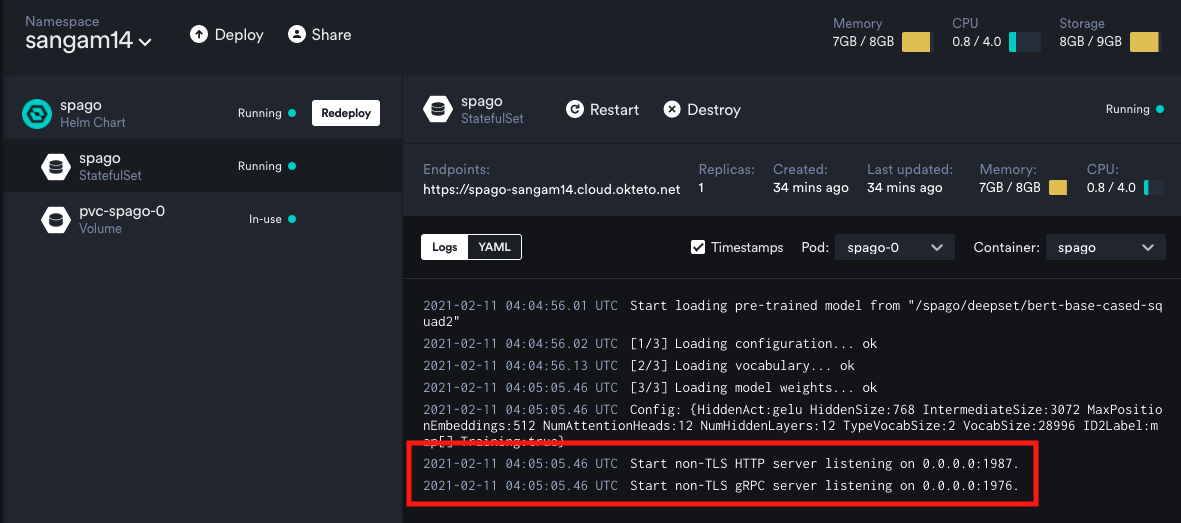

Check the logs of your application, and wait for the model to finish loading (it'll take a minute or two).

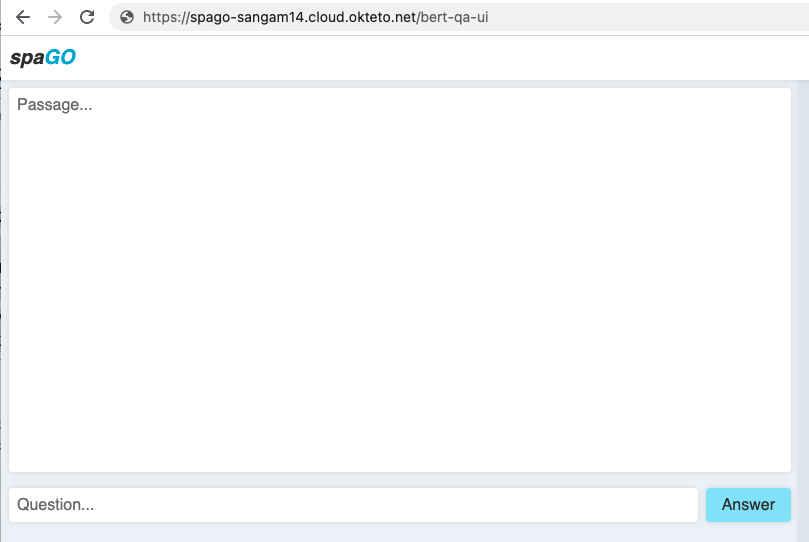

Then go to the spago-sangam14.cloud.okteto.net/bert-qa-ui endpoint (change it to match the address displayed on your dashboard) to access the UI of the service.

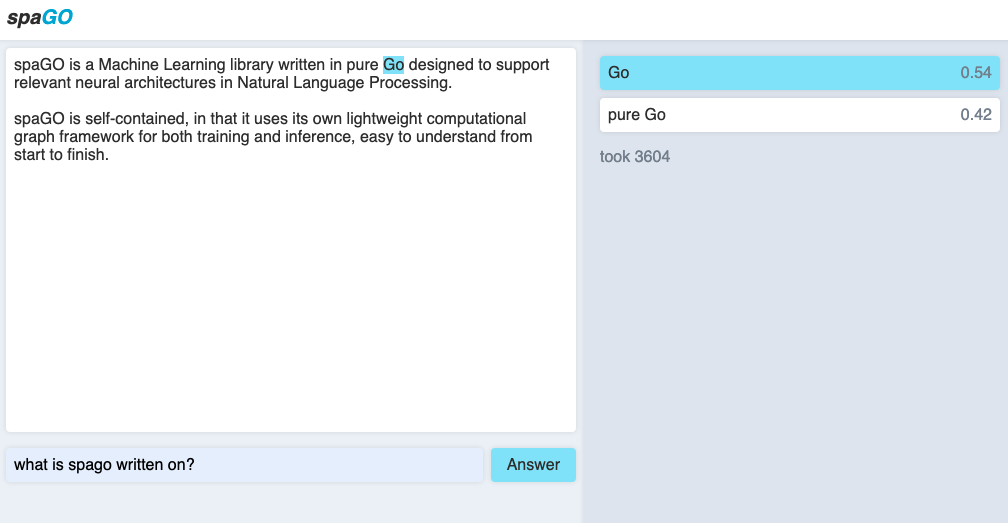

Here is where we can ask the service questions. First, let's give it a passage. For this example, I'm going to go with this text from the spaGO repository:

spaGO is a Machine Learning library written in pure Go designed to support relevant neural architectures in Natural Language Processing. spaGO is self-contained, in that it uses its own lightweight computational graph framework for both training and inference, easy to understand from start to finish.

And now, ask a question. For instance, "What is spaGO written in?".

Cool, right? Go ahead and try a few more questions.

Conclusion

I enjoy how easy it is to deploy a model with spaGO and Okteto. Build a container, deploy a stack, and you almost have a fully functional NLP system. The possibilities are endless!

I hope you find this example useful. I recommend you check out my Docker meetup talk on this topic, where I cover more examples of spaGO. And don't forget to reach out on Twitter to share what you are building with spaGO and Okteto!

Arsh Sharma
